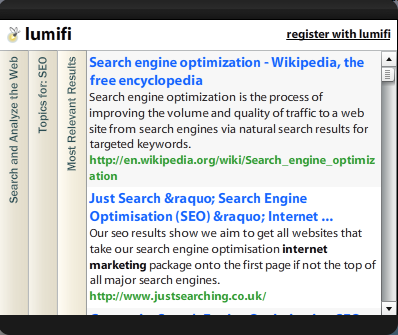

A new search engine, lumifi, is being showcased on Apple.com's widget download page. Unlike other search engines, it does not run through a web browser. With web search so integral to our computing lives, is it preferable to search through a website or an application?

Lumifi's marketing department provides one answer:

"lumifi is different than Google and other search engines in that it reads each search result for you to determine what is actually relevant to your research rather than what happens to be popular at the moment."

But obviously other search engines test for relevance too; determining relevance is their main purpose. Perhaps they mean that lumifi does some sort of post-processing of standard search results, basically a double search: the program queries Google or some other search engine, then filters the results and returns them reordered.
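To make the double-search idea concrete, here is a minimal Python sketch of that kind of post-processing. It is only a guess at the general shape, not lumifi's actual code: the `rerank` and `term_overlap` functions, the `url`/`snippet` fields, and the sample results are all made up for illustration.

```python
from typing import Callable, Dict, List

Result = Dict[str, str]


def rerank(results: List[Result], score: Callable[[Result], float],
           keep: int = 10) -> List[Result]:
    """Reorder upstream results with a second scoring function."""
    # sorted() is stable, so results with equal scores stay in the
    # order the upstream engine returned them.
    return sorted(results, key=score, reverse=True)[:keep]


def term_overlap(query: str) -> Callable[[Result], float]:
    """Hypothetical second-pass score: query words found in the snippet."""
    terms = set(query.lower().split())

    def score(result: Result) -> float:
        words = set(result["snippet"].lower().split())
        return float(len(terms & words))

    return score


# Pretend these came back from the upstream engine, in its own order.
upstream = [
    {"url": "a.example", "snippet": "Cheap tea deals"},
    {"url": "b.example", "snippet": "The Boston Tea Party of 1773"},
    {"url": "c.example", "snippet": "Boston weather"},
]
reranked = rerank(upstream, term_overlap("boston tea party"))
```

Notice that the upstream engine's ordering survives only as a tie-breaker between results the second pass scores equally.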

The problem is that the results are pretty lousy. Google's algorithm is great at detecting spam, but not if you reorder its results with another algorithm. Spam detection works by changing the order of results (demoting the spam), so running a lower-quality algorithm afterwards cancels out its effectiveness. Maybe lumifi does its search from scratch, in which case the larger search engines would still have better algorithms.

While I'm not a huge fan of lumifi, it presents an interesting question: why do we prefer to use a web-based search engine instead of using a separate application?

The two deciding factors are the quality of the search results and convenience.

A standalone application has more power and versatility than one based on the web. For example, lumifi has a nice feature that lets you use its search engine on text files on your computer. This is only possible because it runs on your local computer; if it had to send those files over the internet, the bandwidth costs would be enormous, not to mention the security issues.

Besides being more powerful, standalone applications in general can be a better option than their web counterparts because the user doesn't need an internet connection. But for web search, needing a connection is a given. Search result databases are enormous and perpetually changing; the large file sizes or constant updates necessary to maintain an entirely local application would be intolerable on the user's end.

Web search will always be more popular as a web application because of the integration. When you search for a website, the ultimate goal is to find a link, click on it, and be whisked away to the site. With a standalone app, when a user clicks a link, what would happen? Would they be bounced between the search app and their web browser?

There's the solution lumifi went with: a built-in browser. But this option has several disadvantages. First of all, users are familiar with their web browser and will be reluctant to change (which is why we still put up with IE 6). Second, and more importantly, even if you can convince users to download and use your browser, now you're spread too thin: making a competitive search algorithm that is forever evolving to stifle black-hat methods AND developing a web browser that keeps up with web standards. A fully integrated search-browsing platform is a lot to have on your plate.

Integrating the search application with a web browser is such a daunting task that it could only be done with a huge effort, and that effort would ultimately be in vain. Since the application would reside locally, the algorithm would be local as well. Even if you obfuscated the code, it could be reverse-engineered, and your algorithm made worthless.

In the long run, I don't foresee client-side, standalone applications becoming dominant players in web search. If anyone is going to overtake Google, it will definitely be another web-based search engine.

Lumifi is Flash-based rather than JavaScript-based, but it is not a standalone application. Lumifi does indeed connect to the Internet, which is where all the algorithms run. Lumifi was developed using Adobe Flex.

Lumifi is not a search engine – our public offering, http://www.lumifi.com, leverages a search feed, GigaBlast. We restrict our analysis to the first 50 results returned by GigaBlast, which is why fewer results are shown than on Google.

What we do is add value to a search engine by reading all the results and doing statistical analysis on them. So, if you searched for “american revolution”, for example, we take the first 50 pages that the search engine returns and then we read each page to determine which topics within those pages are statistically relevant. In the case of “american revolution”, we see topics like “articles of confederation”, “benedict arnold”, “benjamin franklin”, “boston tea party”, etc.

What this means is that these topics appear with a high frequency across all pages found by the search engine. The search engine could be anything that conforms to our XML specification, from LexisNexis and PubMed to private archives and libraries.
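For illustration only, the idea of topics that recur across the returned pages can be sketched in a few lines of Python. This is a toy under stated assumptions, not lumifi's actual analysis: the `ngrams` and `common_topics` functions, the `min_share` threshold, and the sample pages are hypothetical, and a plain count of how many pages contain each phrase stands in for whatever statistics the real product uses.

```python
import re
from collections import Counter
from typing import Iterable, List, Set, Tuple


def ngrams(text: str, n_max: int = 3) -> Set[str]:
    """Return the distinct 2- to n_max-word phrases found in a page."""
    words = re.findall(r"[a-z]+", text.lower())
    return {
        " ".join(words[i:i + n])
        for n in range(2, n_max + 1)
        for i in range(len(words) - n + 1)
    }


def common_topics(pages: Iterable[str],
                  min_share: float = 0.5) -> List[Tuple[str, int]]:
    """Phrases that occur in at least min_share of the pages."""
    pages = list(pages)
    doc_freq: Counter = Counter()
    for page in pages:
        # ngrams() returns a set, so each page counts a phrase once.
        doc_freq.update(ngrams(page))
    threshold = min_share * len(pages)
    hits = [(p, c) for p, c in doc_freq.items() if c >= threshold]
    # Most widespread phrases first, alphabetical within a tie.
    return sorted(hits, key=lambda pc: (-pc[1], pc[0]))


# Stand-ins for the text of the pages a search feed returned.
pages = [
    "The Boston Tea Party preceded the war.",
    "Benjamin Franklin and the Boston Tea Party.",
    "Benjamin Franklin signed the treaty.",
]
topics = common_topics(pages, min_share=0.6)
```

A real system would also need to drop filler phrases such as "the boston", which this sketch happily reports alongside "boston tea party".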

As you mentioned, lumifi can also analyze uploaded documents to find statistically relevant terms in them, and we can crawl websites several levels deep to do the same.

The lumifi application only starts with this statistical/contextual analysis. It is essentially an authoring application to compile research, annotate it, collaboratively refine it, and then publish the results. This end publication then becomes fodder for more analysis effecting a feedback loop that reflects the iterative, winnowing nature of research.

Feel free to contact me, Jeff Ploughman, at jploughman@lumifi.com if you have questions.

Hi Jeff,

Thanks for clearing up my mistakes on lumifi. I would have liked to discuss more aspects of lumifi in this post. In addition to the collaboration tools, I neglected to mention another of lumifi’s nice features: extensive content highlighting options. That is one of the reasons I linked to the video of lumifi in action.

-jr

*Note – I changed the title and a bit of the language in the first two paragraphs based on the last comment.

LUMIFI was a really, really bad product and that is why it is no longer!